Welcome to part 3 of a multiple part course on passing your AWS Architect, Developer & Sysops Associate exams. The best part…this course is totally free of charge!

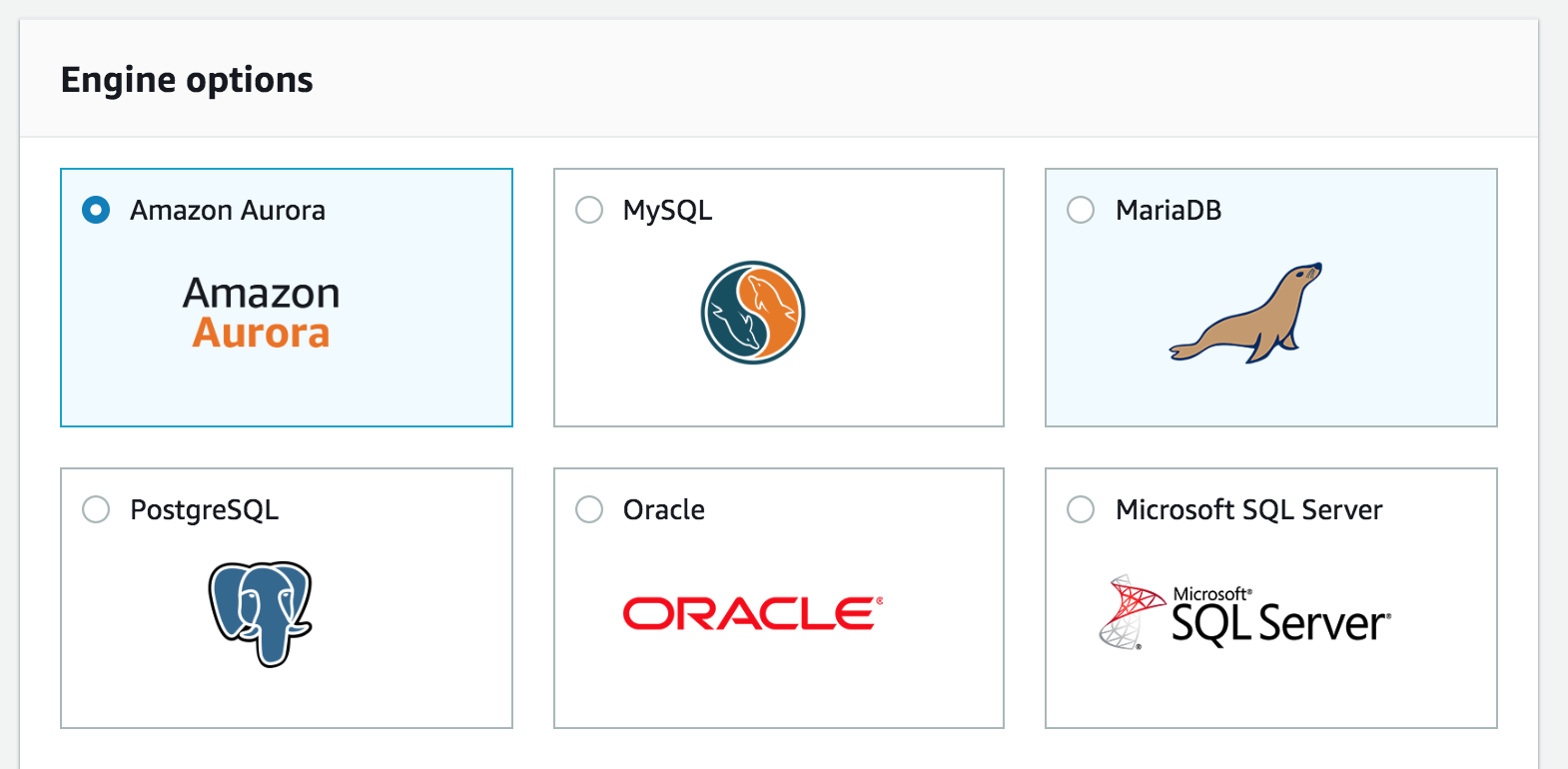

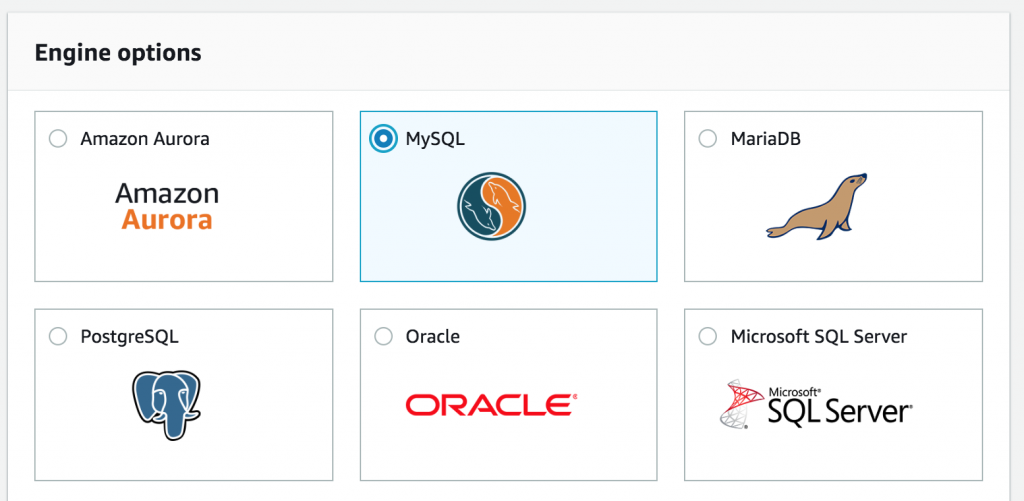

In this article we will look at Amazon’s Relation Database Service (RDS for short). RDS allows user to create relational databases hosted in the cloud for various vendors including Oracle, MySQL, MSSQL and AWS’s proprietary database Aurora.

It is guaranteed to appear in all the associate exams.

The article will take just 15 minutes to read and I’ve included a few realistic exam questions around RDS scenarios at the end of the article as a bonus.

Who should read this?

If you are studying for the:

- AWS Associate Architect

- AWS Associate Developer

- AWS Associate SysOps

Exams or you are using AWS and want to learn more about AWS RDS then this is the article for you.

The basics of Relational Databases

Ok so if you’re reading an article about passing the AWS associate certifications then it’s probably fair to assume that you have some understanding of what a relational database is.

- Let’s not spend too much time going over ground that is already well trodden. So let’s quickly summarise relational databases.

- They are the most popular form of database technology

- They have been used widely throughout the industry for decades

- Their best practices and limitations are well understood

- Typically they are composed of Tables

- Those tables are broken down into rows and columns

- Oracle, PostgreSQL, MySQL, MSSQL, Aurora are all examples of relational database

- They tend to be schema based

What is AWS RDS?

AWS RDS is a cloud hosted highly scalable relational database platform. You can use RDS to host many of the popular third party relation database solutions such as MySQL, Oracle, PostgreSQL, MSSQL & MariaDB.

RDS allows users to easily and quickly create read replica sets of your data and has sophisticated tools for creating backups.

For me it takes a lot of the guesswork out of scaling and managing databases. In the past, you had to worry about a whole bunch of things, like table size management, ram and processor resource allocation.

Companies typically corden off their databases to database administrators. Then developers, testers etc.. will have to liaise with DBAs to gain access to those database. Which makes sense for production databases that are running live data.

However for developers like me, typically I’m working on a development database. That customers will never go near. You’ll find that these also fall within the domain of the DBA’s.

This adds a very real layer of RED TAPE for day to day development. As you’ll often find that DBA time isn’t always available when you need it. And given their natural disposition to restrict access to databases. It can be a real pain getting the access rights required to work on a DB.

AWS RDS (Relational Database Service) does away with all this. I can quickly and easily spin up database instances. Experiment with clustered configurations and generally cut out the middleman to streamline my workflow

I realise this situation doesn’t apply to all organisations. It’s just a personal experience and a little rant 🙂

Anyway on with the show!

Setting up an RDS instance in 10 easy steps

So the first thing you’ll probably want to know is how to create a relational database on AWS RDS.

Never fear! This is a really simple process. Here’s a really quick guide to creating a RDS database.

Step 1: Click on RDS from Management Console

Step 2: Click Launch Instance

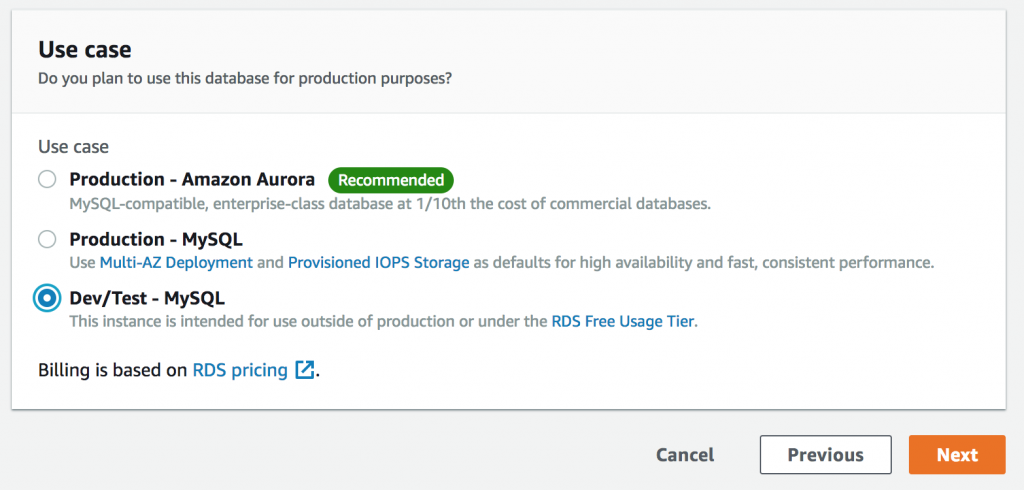

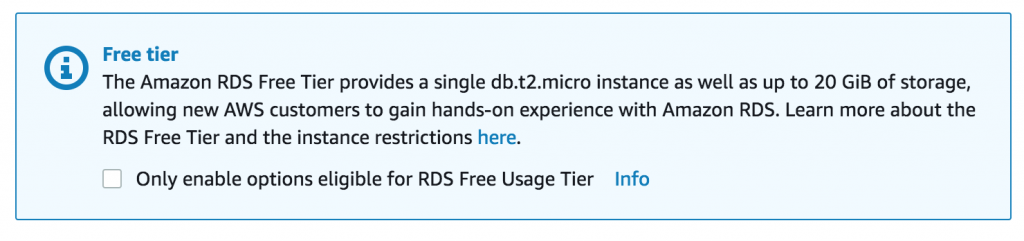

Step 3: Select MySQL from the list of database providers. MySQL offers a free tier option. You shouldn’t be charged for it.

Step 4: On next page leave all settings as default, you won’t be tested on these during the exam.

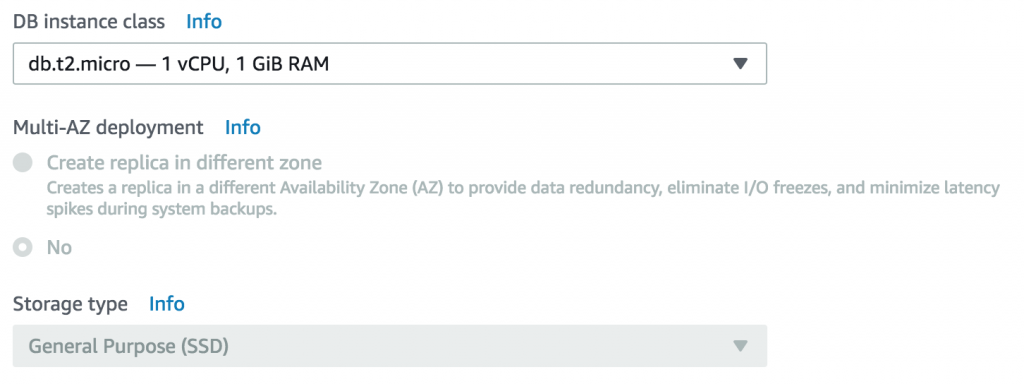

Step 5: Select the T2Micro instance type as this is covered under the free tier.

Step 6: Don’t worry about Multi AZ for now, it’s covered in the Multi AZ’s in 3 minutes section later on.

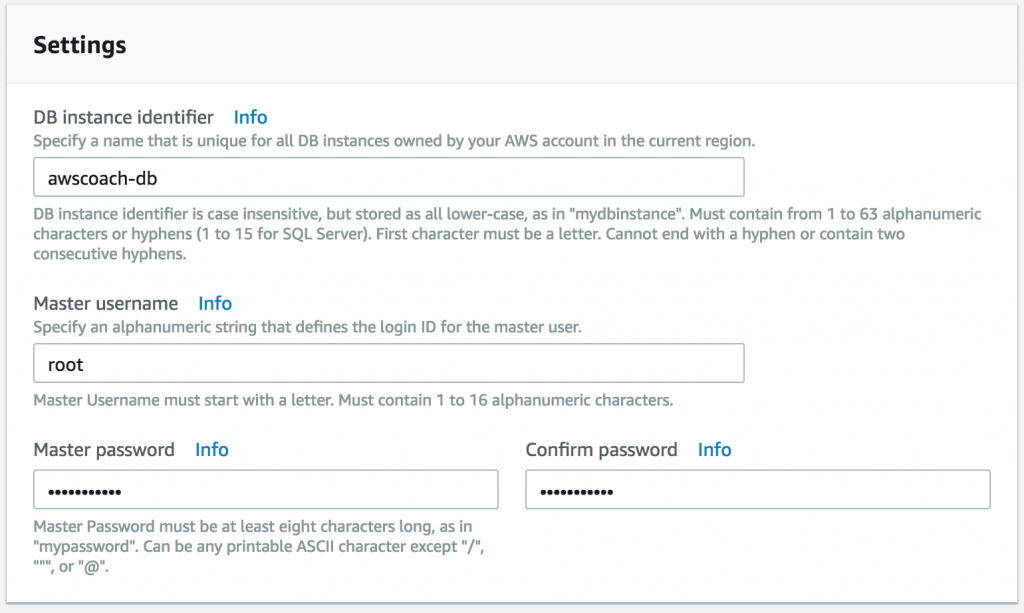

Step 7: Name database instance and enter a username and password

Step 8: Deploy into default VPC and default subnet group, we’ll go into detail about how to setup a VPC in the next blog post, so don’t worry if this seems a little foggy. All you need to know at this point is a database must be assigned to a VPC.

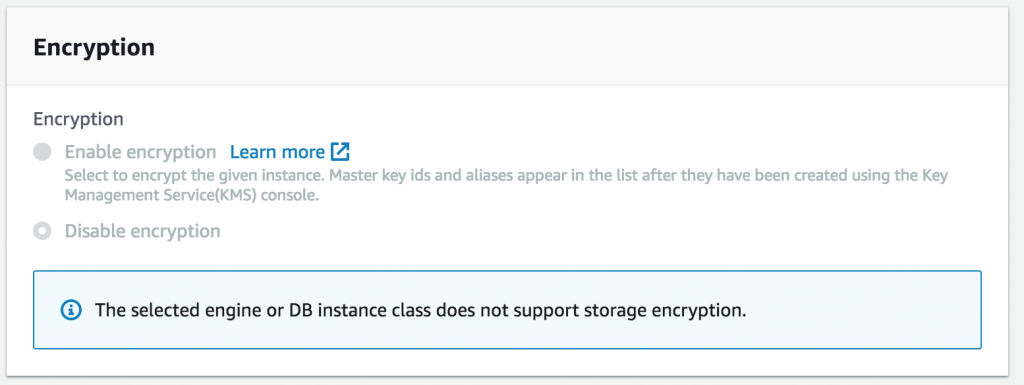

Step 9: Enter a database name and Ignore encryption.

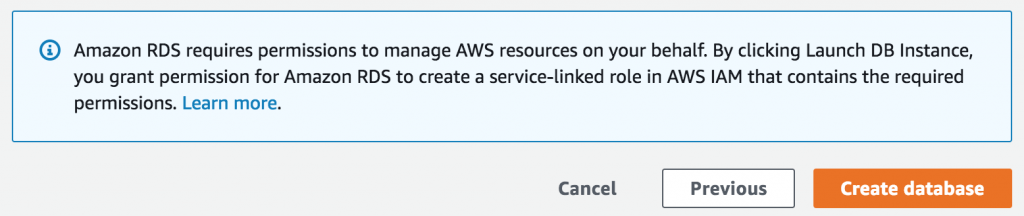

Step 10: Launch my instance – takes a few minutes 5-10 mins

That’s it, you’ve successfully created a database on AWS RDS!

Exam Tip: You are never given a public IP4 address for endpoint, instead you are always given a DNS address.

A little more info on Virtual Private Clouds (VPC)

Although you don’t need to be a VPC wizz in relation to databases. You will be asked scenario questions that include RDS and VPC. The one I see more often than not is:

If you have a database in one VPC and and an EC2 instance in another. Can the EC2 instance access the database?

Well the answer is a resounding NO

In order to allow access to the database, we would need to do the following:

Select security group for the RDS dashboard – security group section – Inbound traffic – allow inbound 3306 add the name of the server and then that EC2 instance can access that database.

Now your EC2 instance will be able to access the database in the separate VPC.

Should I study DynamoDB for the exam?

In short, if you are studying for the Architect Associate exam then you only need a high level understanding of DynamoDB. If however you are studying for the developer & sysops exam then you’ll need to understand it to a detailed level.

I’m going to write an in-depth article specifically on DynamoDB soon, so check that out if you want to know more. I’ll add a link under the “Courses” section.

For now, let’s take a high-level look at DynamoDB:

A DynamoDB database consists of JSON based set of collections, documents and key value pairs. This differs from the concept of relational databases. However, to help relate, you can think of collections as the equivalent of tables, documents the equate to rows in a table and key value pairs would be columns in those rows.

Where it starts to separate from the relational paradigm is that the schema for documents is not fixed!

What does this mean?

Let’s take an example. Suppose we have a document for storing person records.

{

“name” : “Stephen”,

“age” : 32

}

This would live in the person document and with a traditional RDS the fields would be fixed to name and age. Each new entry would require those fields being included even if the value was null.

If we wanted to add another field, say surname. Then we would have to add that field to every record in that table.

With a NoSQL database like DynamoDB this isn’t a problem! You can have records in your documents with completely different key value pairs!

So

{

“name” : “Stephen”,

“age” : 32

}

Can live perfectly fine with

{

“name” : “Stephen”,

“age” : 32,

“surname” : “Foster”

}

Exam tip: Appears massively in the developer associate exam. For the Architect Associate you only need to know DynamoDB at a high level.

DynamoDB features

- DynamoDB is a NoSQL database.

- Can provide single digit latency at any scale

- Flexible non-schema-based model that means it’s well suited for changing data models.

- Popular with use in mobile, web, gaming, ad-tech, IoT applications.

- Stored on SSD storages for fast response times

- Spread across 3 geographic distinct data centres (3 different facilities)

- Scales easily and much EASIER than an RDS database. It is called push button scaling.

DynamoDB read types

There are 2 modals for reading data from a DynamoDB database.

Eventual consistent reads – consistent reads across all copies of data is usually reached within a second. Repeating a read after a short time should return the updated data. (best read performance)

Strongly consistent reads – A strongly consistent read return as a result that reflects all writes that received a successful response prior to the read.

How to understand RedShift for the exam

Redshift is AWS’s data warehouse solution. Used primarily for running complex queries on massive amounts of data.

It is an online analytical processing solution (OLAP). That costs less than a 10th of the cost of traditional solutions. Which scales up to the petabytes.

A common use case would be something along the lines of, a company that has offices in multiple countries wants to compares sales of its latest product across the globe. To do this they have to take many factors into account. For instance, the currencies of where the product is sold. The number of outlets per country. The dates the product was released per country. The list goes on.

Building and running a complex query like this on your live database is likely to slow down performance. That’s where RedShift comes into play.

So let’s look at some of the features of RedShift

A single RedShift node is 160GB in size. You could run a smaller companies data analysis on a single node.

RedShift can also be setup to use multiple nodes. This scenario is more suited for massive multinational companies that need huge amounts of data analysed.

Multi Node configurations are composed of:

- Leader Node (manages client connections and reviews queries)

- Compute Node (store data and perform queries and computations) upto 128 compute nodes

RedShift doesn’t store data like a traditional RDS

Rather than using row based storage, RedShift uses columnar storage. This is where data is stored in vertical columns. Advantages of this approach include:

Faster queries around 10 times faster since when performing queries on columnar data stores the adjacent row data isn’t included.

Data can be compressed much more effectively since the data is stored in columns we know that the data type is the same for each data value.

Massive parallel processing MPP – means redshift can distribute query load across all nodes evenly. Meaning that even if your data grows over time, you’re queries should still perform well.

Wrapping up RedShift

Redshift is only available in 1 AZ, which makes sense since it’s not the same as a production database that needs to be highly available and running 24/7. Redshift is more suited for management level tasks such as analysing sales figures for products

Pricing is based per compute nodes, just like all AWS services you pay only for what you use. The pricing structure is 1 unit per node per hour. Where you have a multi node setup, you would not be charged for the leader node.

Security data is encrypted in transit and encrypted at rest AES 256 encryption. Keys are by default managed for you.

Exam tips

Redshift – database warehousing service

It is very fast

It is very cheap relative

It is on of AWS most popular services

Elasticache explained

Elasticache is AWS’s caching service. It’s primarily used to improves performance of web applications by allowing retrieval of information from in memory cache instead of hitting slower disk databases.

In terms of the exams, it will appear massively in the developer associate exam. For the architect associate you’ll only need to know it at a low level. I’m planning on writing a more in depth article focussing exclusively on elasticache in the future. Checkout the course section to find it.

So…

The main things you need to know are that there are 2 types of caching supported by Elasticache.

Memcached – widely adopted – memcache is compliant.No mutlii AZ support.

Rediss – open sourced, open sets and lists, multi AZ supported.

It allows to deploy, operate and scale an in memory cache in the cloud.

Where does Elasticache make sense?

Any situation where you are retrieving lots of data that doesn’t change that often. Such as for instance Forums or gaming websites where you have a lot more reads than you would writes. Would benefit from a caching solution.

This is because that data will be retrieved from in memory rather than slower disk reads.

Exam tips:

You’re likely to be asked scenarios around situations where a website is under performing and then you’ll be asked to suggest an AWS service to improve it. You’ll be given options like Elasticache, read replicas and redshift and you will have to chose the most appropriate one.

AWS Aurora is potentially going to show up

Whether you’re learning for the exam or just want to become more knowledgeable of AWS RDS, then you simply NEED to know AWS Aurora.

So what is Aurora?

Aurora is a bespoke database created by Amazon. It combines the speed of a commercial database such as Oracle database and the simplicity MySQL an open source database.

Amazon claims that in terms of performance, Aurora is 5 times faster than MySQL and is intended to challenge Oracles market dominance.

It is designed to be compatible with existing MySQL database. While being designed to be highly available out of the box (something Oracle will charge massively for)

Aurora Features

Aurora databases are built on 10 GB blocks. If your database starts to exceed the 10 GB limit then another 10 GB block is automatically allocated. Aurora will continue this until the absolute limit of 64 terabytes!

In addition the instances that the database is running on can scale up to 32 virtual CPU’s and 244 GB or ram, so you’re unlikely to hit those limits any time soon!

Maintains 2 copies of your database in each availability zone, with a minimum of 3 availability zones. In plane english this means you get 6 copies of your data.

Which results in Aurora being able to handle the loss of up to 2 copies of your data without affecting database write availability and upto 3 copies with affecting read availability. This high availability out of the box is something that separates Aurora from the other big players such as Oracle or MSSQL.

One of the interesting things about Aurora that I found is that it is self healing, so if there’s a problem with one of the data blocks then it will fix them automatically. Again this is unlikely to show up in the exam, but is interesting to note.

Aurora read replicas

For Aurora there are 2 types of read replicas:

- Aurora replicas – essentially Aurora duplicate database (currently upto 15)

- MySQL Read Replicas (currently 5)

Pricing – Aurora is not free tier so if you’re thinking of trying out Aurora then I’d suggest setting up an instance, using it for a short time experimenting and such. Afterwards shut down the delete the database. This way you’ll not be met with a nasty charge at the end of the month.

Nb it can take a while for an instance to boot up.

Why is it more expensive? Well that’s because Aurora requires a larger EC2 instance size to run and the usage cost for those is more.

Exam Tip: Can create aurora replicas in different zones if you want.

Exam Tip: AWS push you to create Aurora instead of MySQL

Aurora Summary

- 2 copies of data in each AZ, with min of 4 AZs so 6 copies of your data

- Supported by read replicas

- Can handle 2 data node losses and still function for effect writes

- Can handle 3 data node losses while maintaining effective reads.

- Supports up to 15 aurora replicas

- Supports up to 5 MySQL replicas

- 1 – 35 day backup period

- Encrypted at REST

- Runs on Virtual machine – single point of failure. Although the data is replicated, the instance only running on single node.

A database without backups is no database

This wouldn’t be a complete course on databases if it didn’t include a section on backups.

Every database is going to need to be backed up and backed up often!

For AWS RDS there are 2 types of backups:

Automated backups

This is where AWS will automatically backup your database and hold it for a specified retention period. Typically that can be set for between 1-35 days.

In addition to the backups, AWS will also keep a transaction logs and store it. When a database is restored from a backup, the transaction log is applied to it and then the database restore is complete.

Exam Tip: When restoring a database a new dns endpoint is created.

By default AWS will enable backups and chose the most recent backup and transaction logs.

Those backups are stored on AWS S3, you get free storage equal to the size of your database. So you won’t be charged twice.

When those backups occur IO might be suspended for the database, this can be mitigated with read replicas though. It’s worth noting that this isn’t an issue if you use DynamoDB instead.

If you delete the database then the automated backups go with it so keep this in mind.

Database snapshots

With snapshots, the main differences are that you would create a snapshot manually.

They outlive the database itself. What this means is if you deleted the database then the snapshots would remain and you could then restore the database from them. Where as the automatic backups would be removed.

Database Encryption

Encryption at rest is supported for MySQL, Oracle, MSSQL, PostGreSQL, MariaDB & Aurora. Done using KMS. After encrypting a database, any backups, snapshots etc.. will also be encrypted.

You cannot encrypt an existing database. In order to encrypt an existing database you must make a copy of that database and then encrypt that. – this is an exam tip!

Just what is Read Replicas in AWS?

Most of the time, you’ll find that applications/websites/games etc… that backed by a database will experience far more read requests than writes.

This makes sense when you think about it, because more people consume content than create it.

AWS recognises this and has create the concept of a read replica for RDS. Essentially a read replica is a duplicate of your database this is only used for read requests.

Some of the features of read replicas include:

The ability to have read replicas in different availability zones or regions ( a new feature as of 2018) Although this is unlikely to come up in the exam.

Read replicas can be promoted to become their own databases. This will break the replication however.

You can have upto 5 read replicas of your database. Meaning that at anyone moment in time, when a user makes a request that data could be retrieved from any of those read replica databases.

It’s important to understand that read replicas are a performance enhancing option. Where as Multi Availability Zones are not. This is something that can come up in the exam and understand the difference it good to know.

Exam Tip: Oracle does not support read replicas

Multi Availability Zones in 3 minutes

Having a clear understanding of the difference between multi AZ and read replicas is definitely going to show up in the exam. So it’s important that we cover this ground. Although don’t worry as we only need to understand availability zones at a high level.

So let’s keep this brief:

The purpose of multi availability zones is for disaster recovery.

For instance, if your primary database goes down, then you can switch to another copy of the database running in the background in another AZ.

Currently supported for MySQL, Oracle, MSSQL, PostGreSQL, MariaDB.

Let’s review what we’ve learned

Ok few, we’ve got there. You’ve pretty much covered all you’ll need to know about AWS RDS. So let’s quickly review what we’ve learnt.

AWS RDS is a online transaction processing (OLTP) solution. Supporting multiple third party database vendors including Oracle, MSSQL, MySQL, MariaDB and Postgres.

AWS also supports, a NoSQL solution in the form of DynamoDB. Which supports push button scaling and can handle massive amounts of read request.

- Push button scaling on the fly with no down time

- RDS scaling is harder- generally need to shutdown the database for upgrading

- Stored on SSD storage

- Run on 3 different geographic locations

- Strongly consistent reads

- Eventually consistent reads (default)

Redshift is AWS’s data warehouse solution. Used primarily for running complex queries on massive amounts of data.

- Single node 160gb

- Multi Node – leader node – compute nodes 128

- Costs 1 10th of the price of traditional data warehouse solutions

- Extremely efficient query performance

- Scales to multinational size companies

Elasticache is AWS’s caching service. It’s primarily used to improves performance of web applications by allowing retrieval of information from in memory cache instead of hitting slower disk databases.

- Supports Memcache

- Supports Redis

- Improves performance of websites – data stored in memory

- Exam will be scenario based questions for instance: Choose between dynamoDB and RDS for instance Database is running really slowly, what can you do, use memcache, read replicas etc..

Multi AZ – The purpose of multi availability zones is for disaster recovery.

- Replicated database across multiple availability zones

- Can simulate failover by rebooting primary instance

Read Replica are read only copies of your database. They are designed to reduce stress on the primary database by handling read requests exclusively.

- DB instances sit behind an elastic load balancer

- Can create a read replica of a production database

- Generally used for frequent number of reads

- Upto 5 read replicas

Aurora is AWS’s in house relational database implementation. It is MySQL compatible and yet outperforms it by 5 times. Making it a true competitor to the enterprise level databases such as Oracle and MSSQL.

- 2 copies of data in each AZ, with min of 4 AZs so 6 copies of your data

- Supported by read replicas

- Can handle 2 data node losses and still function for effect writes

- Can handle 3 data node losses while maintaining effective reads.

- Supports upto 15 aurora replicas

- Supports upto 5 MySQL replicas